Proper data handling for business success

Put your data in context

Information technology now permeates every part of our lives, as business has largely moved online. The trend was further fuelled by the epidemic, which showed in many ways how technology can help and improve our quality of life. We buy clothes, housewares, food, and even cars online, and in doing so gain free time that we can spend on something better.

Competition in IT is increasing. The pace is dictated by the rapid growth in the number of users, and the whole industry is striving for ever better user experiences. Companies that already operate online try to profile individuals as accurately as possible by collecting and processing data. Although online tools have made it easy to capture data on websites, the amount of data collected is unmanageable for most companies.

Real-time processing of large amounts of data

It is increasingly important to be able to influence the behaviour of users of online services in real time: to offer them recommendations, to automatically analyse the problems they are experiencing, and to improve their user experience more quickly. All of this requires processing large amounts of data in real time.

What do we mean by big data? The term refers to data sets that are simply too large to analyse and process with the traditional tools we use to work with data, such as relational databases and algorithms that need to look at all the data at once. Big data brings big challenges: data capture, storage, analysis, search, sharing and, finally, visualisation. Some of the most useful modern ways of analysing big data include:

predictive analysis, which uses data analysis to forecast future events and trends,

identification of user behavioural patterns, where we detect deviations in user behaviour that have an impact on the business,

advanced analytics, which creates added value from data.

Creating added value from data

It is this last point that we focus on in this article. At the outset we should always ask ourselves what we want to achieve with big data and machine learning. There are as many answers as there are challenges: we want to solve different problems, improve business processes, optimise operations, make faster and better business decisions, increase the value of the company, and so on. This is all true, but when we look at what we actually want to do, it comes down to this: we want to take the data, send it through a black box and get some useful value out on the other side.

Image 1: Black box. Source: Medius. Translation of the image text: Data > Black box > Usage

Our goal is to build a generic process for collecting large amounts of data, then through analysis and data processing to arrive at a useful value that can be offered to as many customers as possible in near real time.

Building context-dependent data flows

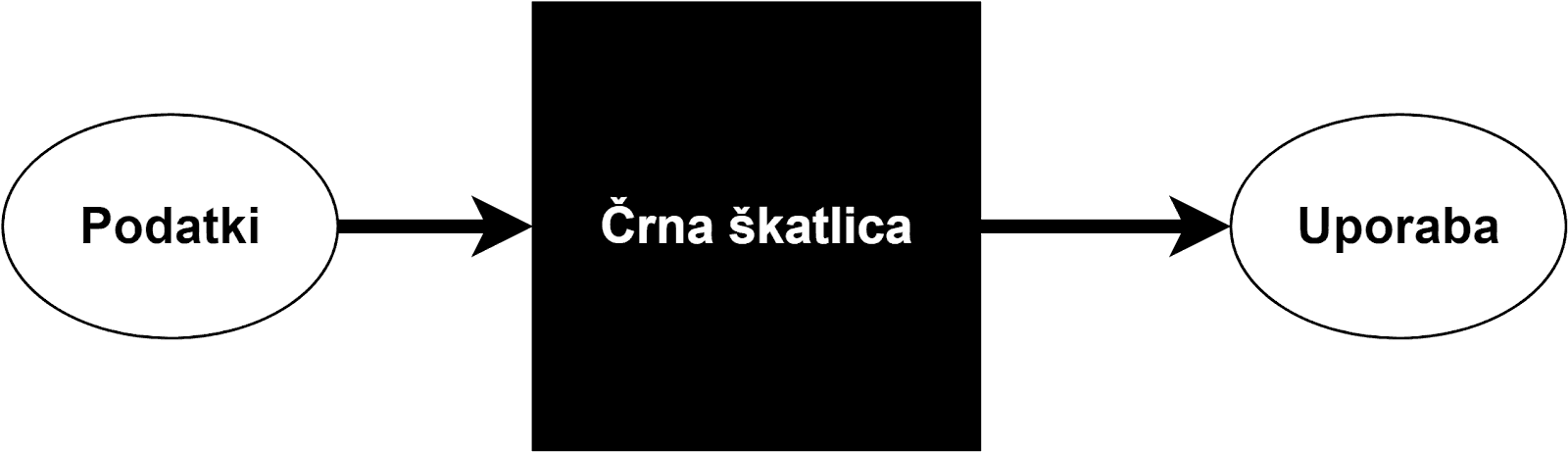

Based on our experience from previous projects dealing with big data and machine learning, we have chosen the approach of building context-dependent data streams. Context-dependent streams are an architectural approach in which we route data through the system based on the context in which the data was created or on its content. As the data travels through the system, it can be further enriched to maximise its usefulness to end customers. Image 2 shows a simple architecture of context-dependent data flows (building on the black box from Image 1):

Image 2: Simple architecture of context-dependent data flows. Source: Medius

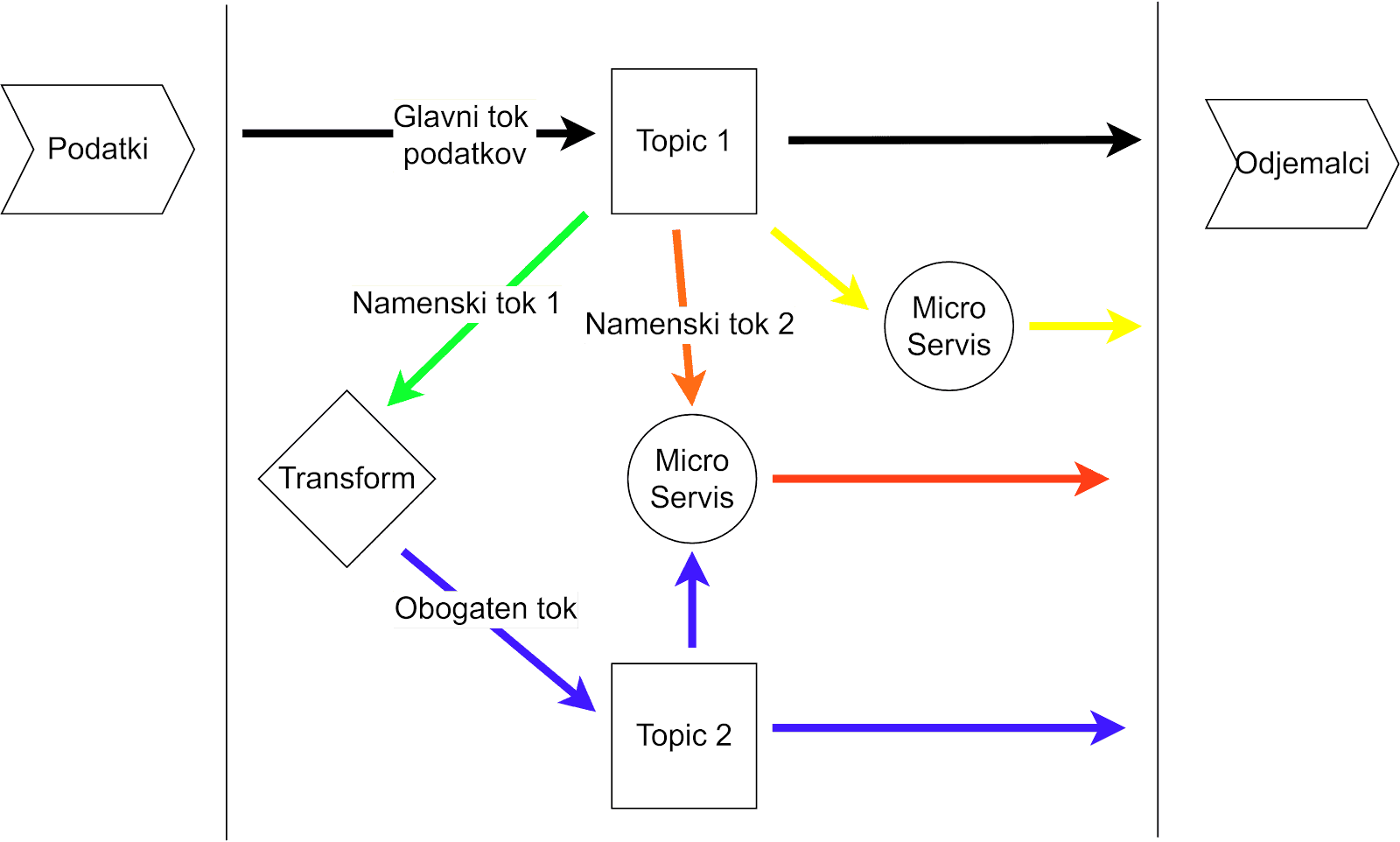

In the upper part we see the input data stream coming into the system and being stored on Topic 1. For the streaming platform we used Apache Kafka, which stores a data stream as a topic that different listeners can connect to. On top of the main stream, we built three context-dependent streams that expose the data to external clients in different ways.

The yellow stream flows into a dedicated microservice that exposes part of the main stream data to external clients. The green stream flows to the Transform component, which further enriches (transforms) the data and stores it on Topic 2, which is also available to external clients. The orange stream flows to a dedicated microservice that also reads the enriched data from Topic 2, merges the two, processes the result further and offers it to external clients. Although they process large amounts of data, all streams operate in near real time.
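To make the three streams more concrete, here is a minimal in-memory sketch. It is not Kafka's API and not our production code: the `Broker` class, the topic names, the event fields and the "large/small" enrichment rule are all assumptions made up for illustration.

```python
from collections import defaultdict


class Broker:
    """In-memory stand-in for a streaming platform. This is NOT Kafka's API."""

    def __init__(self):
        self.topics = defaultdict(list)     # topic name -> stored events
        self.listeners = defaultdict(list)  # topic name -> subscribed callbacks

    def subscribe(self, topic, callback):
        self.listeners[topic].append(callback)

    def publish(self, topic, event):
        self.topics[topic].append(event)    # store the event on the topic
        for callback in list(self.listeners[topic]):
            callback(event)                 # deliver it to every listener


broker = Broker()

# Yellow stream: expose only part of each main-stream event.
yellow_view = []
broker.subscribe(
    "topic-1",
    lambda e: yellow_view.append({"id": e["id"], "amount": e["amount"]}),
)


# Green stream: enrich each event and store the result on topic-2.
def transform(event):
    size = "large" if event["amount"] >= 100 else "small"  # hypothetical rule
    broker.publish("topic-2", {**event, "size": size})


broker.subscribe("topic-1", transform)

# Orange stream: merge the raw event (topic-1) with its enriched copy (topic-2).
orange_view = []
pending = {}  # event id -> the sides ("raw", "enriched") seen so far


def join(side):
    def handler(event):
        sides = pending.setdefault(event["id"], {})
        sides[side] = event
        if len(sides) == 2:  # both the raw and the enriched event have arrived
            orange_view.append({
                "id": event["id"],
                "user": sides["raw"]["user"],
                "size": sides["enriched"]["size"],
            })
            del pending[event["id"]]
    return handler


broker.subscribe("topic-1", join("raw"))
broker.subscribe("topic-2", join("enriched"))

broker.publish("topic-1", {"id": 1, "user": "ana", "amount": 120})
broker.publish("topic-1", {"id": 2, "user": "bor", "amount": 30})
```

The join is written so that it does not matter which side of an event arrives first, which is the property a real merging service would also need.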

Custom data routing

As we can see, routing data through the system depends on the context and the desired use. Anyone can connect to a data stream and start using it at any time, and a new data stream can be built at any time without affecting existing streams and clients. It's a bit like creating new recipes over and over again for different occasions. This makes the data alive and useful immediately, which is especially important for machine learning.
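One way to picture why a new client does not disturb existing ones is an append-only log in which every consumer keeps its own read position. The sketch below is a simplified illustration of that idea, loosely comparable to how consumer offsets work on a streaming platform; the `Topic` class and the consumer names are hypothetical.

```python
class Topic:
    """Append-only log where each consumer tracks its own read position."""

    def __init__(self):
        self._log = []       # events in arrival order, never modified
        self._offsets = {}   # consumer name -> index of the next unread event

    def append(self, event):
        self._log.append(event)

    def poll(self, consumer):
        """Return the events this consumer has not seen yet."""
        start = self._offsets.get(consumer, 0)  # new consumers start at 0
        batch = self._log[start:]
        self._offsets[consumer] = len(self._log)
        return batch


orders = Topic()
orders.append("order-1")
orders.append("order-2")

first_batch = orders.poll("reporting")      # existing client reads two events
orders.append("order-3")
second_batch = orders.poll("reporting")     # and later only the new one
late_joiner = orders.poll("ml-training")    # a new client still sees everything
```

Because reading never removes anything from the log, the late-joining `ml-training` consumer gets the full history while `reporting` carries on from where it left off.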

Challenges in obtaining data

The biggest challenge in machine learning often lies in obtaining the initial data for analysis and for building the first models. To get the data, we need to coordinate with the system administrators, who compile the data and then share it with us. Data transfer does not always go smoothly: we can quickly end up with incomplete data or a glitch during the transfer, so we usually need several iterations of data retrieval. We may also realise later in the analysis that some data is missing and have to repeat the process with the help of the administrators. All of this increases the development time and the number of people working on the project.

By building context-dependent streams, however, this is avoided, as we simply connect to the streams and start processing at the same moment. We can add arbitrary summaries during the process, which further enrich our data by combining different streams or by retrieving information from external services. This gives data scientists direct access to the data, which they can then process as they wish.
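As a small illustration of adding a summary while the data flows, the sketch below attaches a running per-user total to each event as it passes through. The function name and the event fields (`user`, `amount`) are assumptions for this example only.

```python
from collections import defaultdict


def with_running_summary(events):
    """Yield each event enriched with per-user totals seen so far."""
    orders = defaultdict(int)    # user -> number of orders so far
    spent = defaultdict(float)   # user -> total amount so far
    for event in events:
        user = event["user"]
        orders[user] += 1
        spent[user] += event["amount"]
        # The original event is left untouched; we emit an enriched copy.
        yield {**event, "orders_so_far": orders[user], "spent_so_far": spent[user]}


incoming = [
    {"user": "ana", "amount": 10.0},
    {"user": "bor", "amount": 4.0},
    {"user": "ana", "amount": 5.0},
]
enriched = list(with_running_summary(incoming))
```

Since the function is a generator, it processes one event at a time and never needs the whole data set in memory, which is the same constraint a stream processor works under.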

Working with enriched data

By pre-contextualising the collected data, we create a better foundation for analysis and model learning later on, as the data is already combined or enriched. This significantly speeds up the extraction of features to be used for predictive and learning models. The features and the prediction are sent further down the data stream, where they can be checked later for correctness and annotated with the actual result. This allows us to see how good our model is, while at the same time providing us with a new training set that we can use to improve the model.
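The feedback loop described above can be sketched roughly as follows: each prediction is stored together with its features, later annotated with the actual result, and the annotated records serve both as a quality check and as a new training set. All names, labels and feature fields here are hypothetical.

```python
log = {}  # prediction id -> {"features", "predicted", "actual"}


def record_prediction(pid, features, predicted):
    """Store the features and the prediction; the outcome is not known yet."""
    log[pid] = {"features": features, "predicted": predicted, "actual": None}


def record_outcome(pid, actual):
    """Annotate an earlier prediction with what really happened."""
    log[pid]["actual"] = actual


def labelled():
    return [r for r in log.values() if r["actual"] is not None]


def accuracy():
    """Share of annotated predictions that were correct (None if none yet)."""
    rows = labelled()
    if not rows:
        return None
    return sum(r["predicted"] == r["actual"] for r in rows) / len(rows)


def training_set():
    """Annotated records as (features, actual result) pairs."""
    return [(r["features"], r["actual"]) for r in labelled()]


record_prediction("p1", {"visits": 3}, "buy")
record_prediction("p2", {"visits": 1}, "buy")
record_prediction("p3", {"visits": 7}, "skip")
record_outcome("p1", "buy")    # the prediction was right
record_outcome("p2", "skip")   # the prediction was wrong
# p3 has no outcome yet, so it is ignored by accuracy() and training_set()
```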

By reading the data directly from the stream, we create the conditions for incremental learning (online learning). As soon as we know the actual outcome for a set of features, we can use that information to train the model and improve it in real time. This allows the model to adapt quickly and continuously to changes in the available data.
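To show what a single incremental update can look like, here is a minimal sketch of online logistic regression trained one example at a time with stochastic gradient descent. It is one possible technique chosen for illustration, not necessarily the model we use; the class name, the learning rate and the toy stream are assumptions.

```python
import math


class OnlineLogisticRegression:
    """Logistic regression updated one (features, outcome) pair at a time."""

    def __init__(self, n_features, learning_rate=0.5):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, x):
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        return 1.0 / (1.0 + math.exp(-z))

    def learn_one(self, x, y):
        """One gradient step on the log loss for a single example."""
        error = y - self.predict_proba(x)
        self.weights = [
            w + self.learning_rate * error * xi
            for w, xi in zip(self.weights, x)
        ]
        self.bias += self.learning_rate * error


model = OnlineLogisticRegression(n_features=1)

# Toy stream: a negative feature value means outcome 0, a positive one outcome 1.
stream = [([-1.0], 0), ([1.0], 1)] * 50
for features, actual in stream:
    model.learn_one(features, actual)  # update as soon as the outcome is known
```

The model never sees the stream as a whole; each call to `learn_one` uses only the current example, so the same loop could run indefinitely on live data.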

Continuous improvement or incremental learning is one of the main advantages of data-driven business.

Example of use

You have probably been in a situation while shopping online where a website offered you a product that was supposed to be relevant to you. If the information was processed correctly, you really did need the product and may even have bought it. This is an example of how machine learning can be integrated into the user experience. So how does it work? When a user comes to an online shop, a prediction is made and, based on it, some products are offered to the user. When the user makes a purchase, that information is fed back into the system and used to train the model. With the same information, we can verify whether the product the user bought is among the products we predicted. This way it is clear whether the model is good and whether it is adapting correctly to the data it receives.
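The loop in this example (predict, observe the purchase, check the prediction, train) can be sketched with a deliberately naive recommender that simply offers the most frequently bought products. A real shop would use a far richer model; the class name and product names are made up.

```python
from collections import Counter


class PopularityRecommender:
    """Offers the k most purchased products and tracks how often it was right."""

    def __init__(self, k=1):
        self.k = k
        self.counts = Counter()  # product -> number of purchases seen
        self.hits = 0            # purchases that were among the offered products
        self.shown = 0           # purchases checked against an offer

    def recommend(self):
        return [product for product, _ in self.counts.most_common(self.k)]

    def purchase(self, product, offered):
        """Check the offer against the purchase, then learn from the purchase."""
        hit = product in offered
        self.shown += 1
        self.hits += hit
        self.counts[product] += 1  # incremental training step
        return hit


shop = PopularityRecommender(k=1)
results = []
for bought in ["socks", "socks", "hat"]:
    offered = shop.recommend()           # prediction made when the user arrives
    results.append(shop.purchase(bought, offered))

hit_rate = shop.hits / shop.shown
```

The first purchase is a miss because the model has seen nothing yet, the second is a hit, and the third is a miss again; the running hit rate is the kind of signal that tells us whether the model is adapting to the data.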

Simple installation and cost efficiency

Our proposal is to design the system using open source tools such as Kafka, Elastic Stack, Apache Spark and Quarkus. The basic idea is a simple initial set-up, low cost and the ability to upgrade individual components. The system can initially be set up for a small segment of the captured data and its processing, and then be extended according to needs and usefulness. Data can be drawn from a variety of (new) sources, and both the number of context-dependent streams and the number of clients using the system can grow. The initial cost stays low and the possibilities for expansion are virtually unlimited. How the architecture grows over time depends entirely on your needs and wishes rather than on the limitations of the system.

A brief description of the components used:

Kafka is a platform for distributed event streaming. It offers high throughput and is scalable.

Elastic Stack combines Elasticsearch, Kibana, Logstash and Beats. Logstash and Beats are used to send data to Elasticsearch, which stores the data and makes it quickly searchable, and Kibana is used to visualise the stored data.

Apache Spark is an engine for large-scale data processing, used primarily for analysis and machine learning on large amounts of data.

Quarkus is a framework for developing Java applications. It is primarily aimed at developing "Cloud Native" applications with a "Container First" approach.

Benefits of using context-dependent flows

The benefits of a data flow architecture that uses context-dependent flows are enormous. We can assign a use value to statistics that are tightly locked in one of our databases and then offer them up for use. A major advantage is that data can be offered for processing from the moment it is stored in the databases, i.e. in near real time, enabling extremely fast reactions in the system.

Data streams can even be used to support our business processes, e.g. if we are monitoring the order flow via an online shop, we can implement support for warehouses and the delivery service via order data. Thanks to this shortcut, we do not need to build two additional communication channels between the two systems. On the other hand, we have the possibility to enrich the data, because context-dependent flows allow us to combine and enrich data from different sources that are interconnected. We therefore expose the data to new consumers and at the same time facilitate the initial machine learning phase of data preparation. This particularly takes the burden off the administrators who would have to prepare the data.

Caution should be exercised when processing personal data that needs to be protected. Context-dependent streams allow us to locate and mask personal data in real time, while still offering the usable part of the data to users who connect to the data stream. This protects against unauthorised use of the data.
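A masking step on a stream might look roughly like the sketch below: known sensitive fields are replaced outright, and e-mail addresses are masked inside free-text fields. The field names and the simple e-mail pattern are assumptions for illustration; real personal data protection needs rules agreed with whoever is responsible for the data.

```python
import re

# Hypothetical set of fields that always hold personal data.
SENSITIVE_FIELDS = {"name", "email", "phone"}

# Deliberately simple e-mail pattern; it will not catch every valid address.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def mask_event(event):
    """Return a copy of the event with personal data masked."""
    masked = {}
    for key, value in event.items():
        if key in SENSITIVE_FIELDS:
            masked[key] = "***"                     # hide the whole field
        elif isinstance(value, str):
            masked[key] = EMAIL.sub("***", value)   # hide e-mails in free text
        else:
            masked[key] = value                     # non-text values pass through
    return masked


order = {
    "order_id": 7,
    "name": "Ana",
    "note": "contact ana@example.com please",
    "amount": 12.5,
}
safe_order = mask_event(order)
```

The function returns a new dictionary and leaves the original event untouched, so the unmasked stream can stay restricted while the masked copy is exposed to other consumers.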

Data is gold and it is important to make the best of it. That is why, in today's information age, responsiveness to change is key. We are entering a machine learning era where accessing and processing the right data can mean the difference between business success and failure.

Here's to the future, here's to machine learning, here's to successful projects!